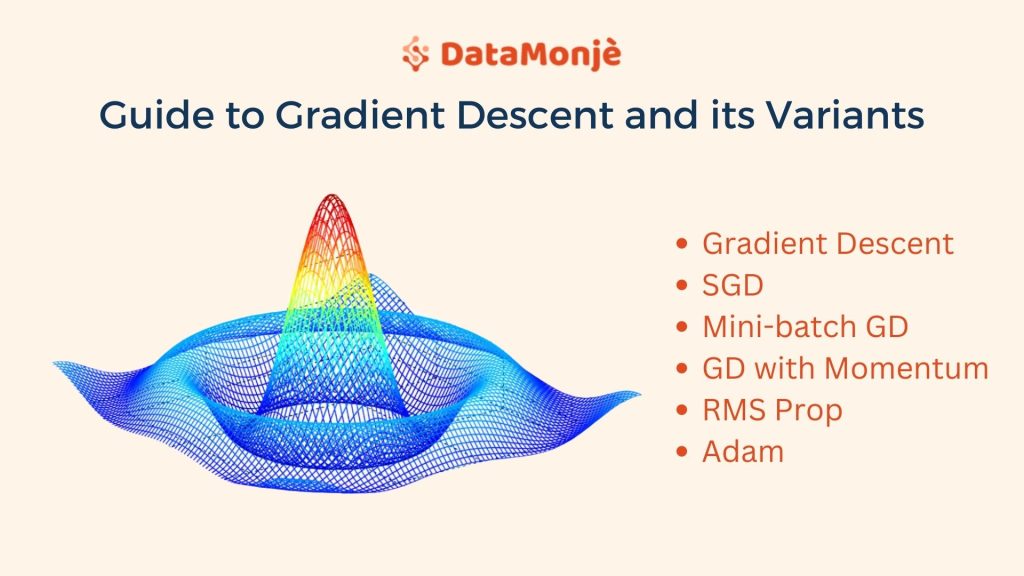

Guide to Gradient Descent: Working Principle and its Variants

One of the core ideas of machine learning is that it learns via optimization. Gradient descent is an optimization algorithm that learns iteratively. Here, "gradient" refers to the slope of the loss with respect to the model's parameters, and "descent" refers to moving downhill. The idea is that the error, or loss, decreases in small steps until it eventually reaches a minimum. The …
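As a rough illustration of the idea in this excerpt, here is a minimal sketch of gradient descent on a one-dimensional function. The function name `gradient_descent`, the example loss `(x - 3)^2`, and the learning rate and step count are all assumptions chosen for illustration; they do not come from the original post.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`.

    `grad` is a function returning the slope of the loss at x.
    All parameter names and defaults here are illustrative assumptions.
    """
    x = start
    for _ in range(steps):
        # Move a small step in the direction that reduces the loss.
        x = x - learning_rate * grad(x)
    return x


# Example loss: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
# Its minimum is at x = 3.
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
print(minimum)
```

With a learning rate that is too large the steps can overshoot and diverge, so the step size is usually one of the first things to tune.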

A Beginner’s Guide to Loss functions for Classification Algorithms

Welcome to the second part of the Complete Guide to Loss Functions. Previously, we studied the Regression Losses in depth. In this blog, we will go through the details of the Classification Loss Functions. Classification algorithms like Logistic Regression, SVM (Support Vector Machines), and Neural Networks use a specific set of loss functions for the …

A Beginner’s Guide to Loss functions for Regression Algorithms

An in-depth explanation of widely used regression loss functions like mean squared error, mean absolute error, and Huber loss. A loss function in supervised machine learning is like a compass that gives algorithms a sense of direction while learning parameters or weights. This blog will explain the …
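The three losses named in this excerpt can be sketched in plain Python as follows. This uses the standard textbook definitions, not code from the original post; the function names, the `delta` default of 1.0, and the sample values are assumptions for illustration.

```python
def mean_squared_error(y_true, y_pred):
    # Average of squared differences; penalizes large errors heavily.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    # Average of absolute differences; less sensitive to outliers.
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def huber_loss(y_true, y_pred, delta=1.0):
    # Quadratic for small errors, linear for large ones.
    # `delta` (an assumed default) marks the switch between the two.
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


# Made-up sample values; the last prediction is a deliberate outlier.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.5, 6.0]

print(mean_squared_error(y_true, y_pred))
print(mean_absolute_error(y_true, y_pred))
print(huber_loss(y_true, y_pred))
```

On data with an outlier like this, the squared error is dominated by the single large miss, while the Huber loss grows only linearly with it.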

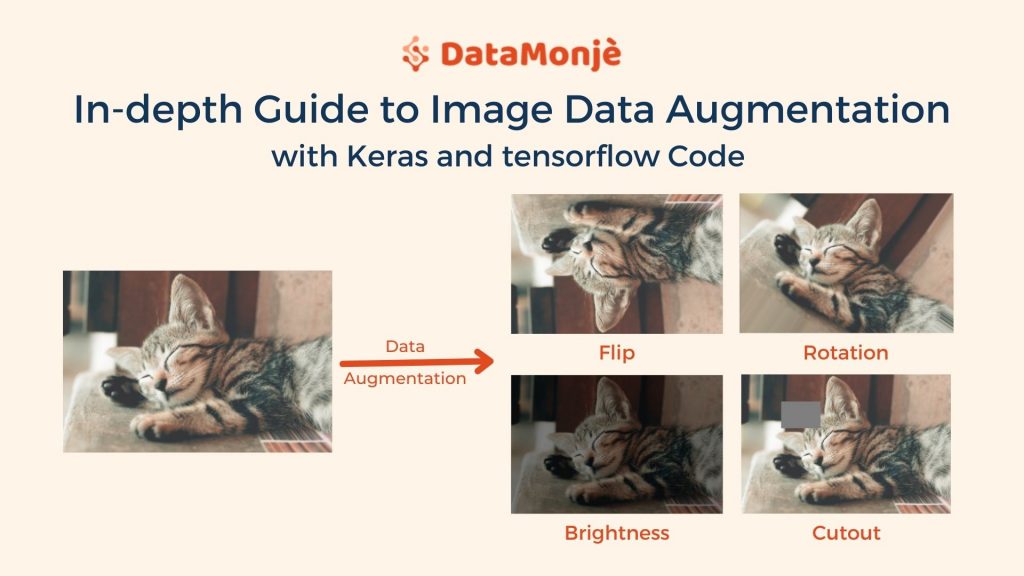

Guide to Image Data Augmentation: from Beginners to Advanced [tensorflow + Keras]

Image augmentation is an engineered solution to create a new set of images by applying standard image processing methods to existing images.

This solution is mostly useful for neural networks, such as CNNs, when the training dataset is small. However, image augmentation is also used with large datasets as a regularization technique to build a more generalized, robust model.

Deep learning algorithms are not powerful just because of their ability to mimic the human brain. They are also powerful because of their ability to thrive with more data. In fact, they require a significant amount of data to deliver considerable performance.

High performance with a small dataset is unlikely. Here, an image data augmentation technique comes in handy when we have only a small image dataset to train an algorithm...
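To make the idea concrete, here is a minimal sketch that creates new images from an existing one using simple flips and a rotation. It deliberately uses plain Python lists rather than TensorFlow or Keras so that it stays self-contained; the function names and the tiny 2x3 "image" are assumptions for illustration only, and a real pipeline would typically rely on a library's augmentation utilities instead.

```python
def flip_horizontal(image):
    # Mirror each row left to right.
    return [row[::-1] for row in image]


def flip_vertical(image):
    # Reverse the order of the rows (top to bottom).
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    # Reverse the rows, then transpose.
    return [list(col) for col in zip(*image[::-1])]


def augment(image):
    # Return the original plus three transformed copies.
    return [
        image,
        flip_horizontal(image),
        flip_vertical(image),
        rotate_90_clockwise(image),
    ]


# A tiny made-up grayscale "image" with 2 rows and 3 columns.
image = [[1, 2, 3],
         [4, 5, 6]]

augmented = augment(image)
for variant in augmented:
    print(variant)
```

One source image yields four training samples here, which is the sense in which augmentation enlarges a small dataset without collecting new data.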
